On Par with OpenAI's Q*: Huawei Noah's Ark Lab Explores LLM Reasoning with MindStar


The authors of the paper are from Huawei's Noah's Ark Lab in Montreal: Jikun Kang, Xin Zhe Li, Xi Chen, Amirreza Kazemi, and Boxing Chen.

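Artificial intelligence (AI) has made great strides over the past decade, particularly in natural language processing and computer vision. Improving AI's cognitive and reasoning abilities, however, remains a major challenge.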

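Recently, a paper titled "MindStar: Enhancing Math Reasoning in Pre-trained LLMs at Inference Time" proposed MindStar [1], a tree-search-based method for improving reasoning ability at inference time. Applied to the open-source models Llama-2-13B and Mistral-7B, it reaches math reasoning performance close to that of the closed-source models GPT-3.5 and Grok-1.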

- Paper title: MindStar: Enhancing Math Reasoning in Pre-trained LLMs at Inference Time

- Paper link: https://arxiv.org/pdf/2405.16265v4

MindStar's experimental results on math reasoning:

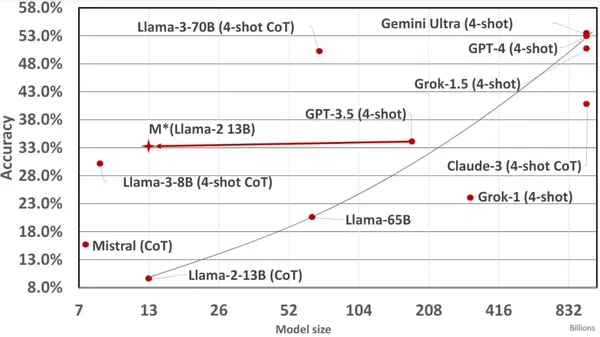

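Figure 1: Math accuracy of different large language models. LLaMA-2-13B performs on par with GPT-3.5 (4-shot) on math while using roughly 200 times fewer computational resources.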

1. Introduction

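With the rapid growth in model size, Transformer-based large language models (LLMs) have shown impressive results in instruction following [1,2], coding assistance [3,4], and creative writing [5]. Unlocking the ability of LLMs to solve complex reasoning tasks, however, remains a major challenge. Some recent work [6,7] tackles the problem with Supervised Fine-Tuning (SFT): new reasoning samples are mixed into the original dataset so that the LLM learns their underlying distribution and tries to imitate the learned logic on unseen reasoning tasks. Although this approach improves performance, it relies heavily on extensive training and additional data preparation [8,9].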

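The Llama-3 report [10] highlights an important observation: when faced with a challenging reasoning problem, the model sometimes does generate the correct reasoning trace. This suggests that the model knows how to produce the right answer but has trouble selecting it. Based on this finding, we ask a simple question: can we enhance the reasoning ability of LLMs by helping them select the correct output? To explore this, we ran an experiment that uses different reward models to select among LLM outputs. The results show that step-level selection significantly outperforms the conventional CoT approach.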

2. The MindStar Method

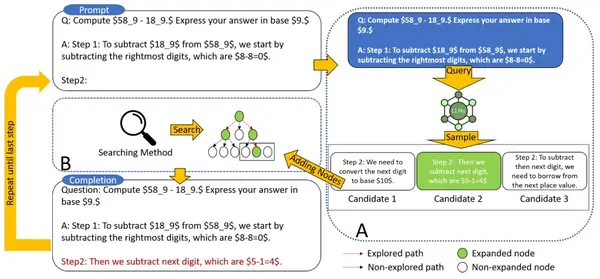

Figure 2: Algorithmic architecture of MindStar.

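We introduce a new reasoning search framework, MindStar (M*). By treating reasoning tasks as search problems and using a Process-supervised Reward Model (PRM), M* navigates the reasoning tree space effectively and identifies near-optimal paths. Incorporating ideas from Beam Search (BS) and Levin Tree Search (LevinTS) further improves search efficiency and guarantees that the best reasoning path is found within bounded computational complexity.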

2.1 Process-supervised Reward Model

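The Process-supervised Reward Model (PRM) is designed to evaluate the intermediate steps generated by a large language model (LLM) so that the correct reasoning path can be selected. The approach builds on the success of PRMs in other applications. Specifically, the PRM takes the current reasoning path and a potential next step as input and returns a reward value.

The PRM evaluates a new step by considering the entire current reasoning trajectory, which encourages consistency with, and faithfulness to, the overall path. A high reward value indicates that the new step is likely correct for the given reasoning path, so the extended path is worth exploring further. Conversely, a low reward value indicates that the new step is probably incorrect, which means that solutions following this path are probably incorrect as well.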

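The M* algorithm consists of two main steps, which are repeated until a correct solution is found:

1. Reasoning path expansion: in each iteration, the base LLM generates the next steps of the current reasoning path.
2. Evaluation and selection: the PRM evaluates the generated steps, and the reasoning path for the next iteration is selected.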
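The two steps above can be sketched as a simple loop. This is a minimal illustration rather than the paper's implementation: `propose`, `score`, and `is_final` are hypothetical callables standing in for the base LLM, the PRM, and a stopping check, and the selection here is plain greedy (keep the single best child) instead of Beam Search or Levin Tree Search.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Node:
    steps: List[str]      # reasoning path so far (empty at the root)
    reward: float = 0.0   # reward assigned to the last step


def m_star_sketch(
    question: str,
    propose: Callable[[str, List[str], int], List[str]],  # hypothetical LLM wrapper
    score: Callable[[str, List[str], str], float],        # hypothetical PRM wrapper
    is_final: Callable[[str], bool],                      # hypothetical stop check
    n_candidates: int = 4,
    max_iters: int = 10,
) -> Node:
    current = Node(steps=[])
    for _ in range(max_iters):
        # Step 1: reasoning path expansion.
        candidates = propose(question, current.steps, n_candidates)
        if not candidates:
            break
        # Step 2: evaluation and (greedy) selection.
        children = [
            Node(current.steps + [c], score(question, current.steps, c))
            for c in candidates
        ]
        current = max(children, key=lambda node: node.reward)
        if is_final(current.steps[-1]):
            break
    return current


# Toy stand-ins so the sketch runs without any model.
def toy_propose(question, steps, n):
    k = len(steps)
    if k >= 2:
        return ["answer: 4"]
    return [f"step{k}-good", f"step{k}-bad"][:n]


def toy_score(question, steps, step):
    return 0.1 if step.endswith("bad") else 0.9


result = m_star_sketch("What is 2 + 2?", toy_propose, toy_score,
                       lambda s: s.startswith("answer"))
print(result.steps)  # ['step0-good', 'step1-good', 'answer: 4']
```

Keeping the LLM and PRM behind plain callables means the loop itself never touches model weights, which matches the inference-time focus of the method.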

2.2 Reasoning Path Expansion

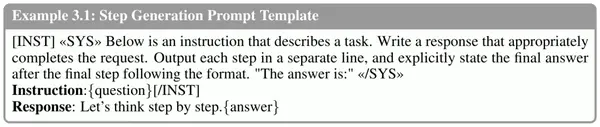

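After selecting the reasoning path to expand, we design a prompt template (Example 3.1) to collect next steps from the LLM. As the example shows, the LLM takes the original question as {question} and the current reasoning path as {answer}. Note that in the first iteration of the algorithm the selected node is the root node, which contains only the question, so {answer} is empty. For the selected reasoning path, the LLM generates N intermediate steps and appends them as children of the current node. In the next step of the algorithm, these newly generated child nodes are evaluated and a new node is selected for further expansion. We are also aware that another way to generate steps is to fine-tune the LLM with step tokens. That, however, could degrade the LLM's reasoning ability and, more importantly, runs counter to the focus of this work: enhancing the reasoning ability of LLMs without modifying their weights.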
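As a rough illustration of the expansion step, the sketch below fills a template with {question} and {answer} and samples N candidate next steps. The template wording is hypothetical (the article's Example 3.1 is not reproduced here), and `sample` is an assumed callable that wraps whatever LLM is used.

```python
import itertools
from typing import Callable, List

# Hypothetical template; only the {question} and {answer} slots come from the article.
TEMPLATE = "Question: {question}\nAnswer so far: {answer}\nNext step:"


def build_prompt(question: str, path: List[str]) -> str:
    # At the root the path is empty, so {answer} is the empty string.
    return TEMPLATE.format(question=question, answer="\n".join(path))


def expand(question: str, path: List[str],
           sample: Callable[[str], str], n: int) -> List[List[str]]:
    """Return n child paths, each extending `path` by one sampled step."""
    prompt = build_prompt(question, path)
    return [path + [sample(prompt)] for _ in range(n)]


# Toy sampler that returns numbered placeholders instead of calling a model.
counter = itertools.count(1)
children = expand("What is 2 + 2?", ["Adding 2 and 2."],
                  lambda prompt: f"candidate {next(counter)}", n=3)
print(len(children))  # 3
```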

2.3 Reasoning Path Selection

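After expanding the reasoning tree, we use a pre-trained Process-supervised Reward Model (PRM) to evaluate each newly generated step. As mentioned earlier, the PRM takes a path and a step and returns the corresponding reward value. After evaluation, we need a tree search algorithm to select the next node to expand. Our framework does not depend on a specific search algorithm; in this work we instantiate two best-first search methods, Beam Search and Levin Tree Search.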
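The two selection strategies can be sketched as follows. Beam Search here simply keeps the highest-reward paths. The Levin-style rule uses the usual Levin tree search priority of depth divided by path probability; treating the product of per-step PRM rewards as that probability is an assumption made for this sketch, and the function names are illustrative.

```python
import heapq
from typing import List, Tuple

Path = Tuple[str, ...]


def beam_select(scored: List[Tuple[Path, float]], beam_width: int) -> List[Path]:
    """Keep the `beam_width` paths whose latest step has the highest reward."""
    ranked = sorted(scored, key=lambda item: item[1], reverse=True)
    return [path for path, _ in ranked[:beam_width]]


def levin_cost(step_rewards: List[float]) -> float:
    """Depth divided by the product of per-step rewards (lower is better)."""
    prob = 1.0
    for reward in step_rewards:
        prob *= max(reward, 1e-9)  # guard against division by zero
    return len(step_rewards) / prob


def levin_select(frontier: List[Tuple[Path, List[float]]]) -> Path:
    """Pop the frontier path with the lowest Levin-style cost."""
    heap = [(levin_cost(rewards), path) for path, rewards in frontier]
    heapq.heapify(heap)
    return heapq.heappop(heap)[1]


beam = beam_select([(("a",), 0.2), (("b",), 0.9), (("c",), 0.5)], beam_width=2)
best = levin_select([(("deep", "path"), [0.9, 0.9]), (("shallow",), [0.5])])
print(beam)  # [('b',), ('c',)]
print(best)  # ('shallow',)
```

The second call shows how the depth term changes the ranking: the two-step path has higher rewards, but its cost of 2 / 0.81 exceeds the one-step path's cost of 1 / 0.5.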

3. Results and Discussion

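Extensive evaluation on the GSM8K and MATH datasets shows that M* significantly improves the reasoning ability of open-source models (such as LLaMA-2), reaching performance comparable to much larger closed-source models (such as GPT-3.5 and Grok-1) while substantially reducing model size and computational cost. These findings highlight the potential of shifting computational resources from fine-tuning to inference-time search and open new avenues for future research on efficient reasoning enhancement techniques.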

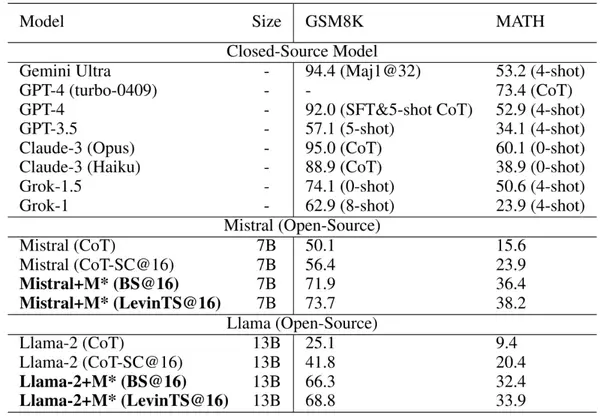

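Table 1: Comparison of different approaches on the GSM8K and MATH reasoning benchmarks. Each entry is the percentage of problems solved. SC@32 denotes self-consistency over 32 candidate results, and n-shot denotes results with few-shot examples. CoT-SC@16 refers to self-consistency over 16 chain-of-thought (CoT) candidate results. BS@16 denotes the beam search method with 16 candidates at each step level, and LevinTS@16 denotes the Levin tree search method with the same number of candidates. Note that the latest GPT-4 result on the MATH dataset is from GPT-4-turbo-0409; we highlight it because it represents the best performance in the GPT-4 family.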
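For readers unfamiliar with the SC@k notation, self-consistency amounts to sampling k answers and taking a majority vote. The sketch below shows only that voting step, with made-up answer strings; answer extraction and normalization are reduced to whitespace stripping, which is a simplification.

```python
from collections import Counter
from typing import List, Optional


def self_consistency(answers: List[str]) -> Optional[str]:
    """Return the most frequent non-empty answer among the sampled candidates."""
    normalized = [a.strip() for a in answers if a and a.strip()]
    if not normalized:
        return None
    return Counter(normalized).most_common(1)[0][0]


# Four sampled final answers (illustrative values, not results from the paper).
print(self_consistency(["4", "5", "4", " 4 "]))  # 4
```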

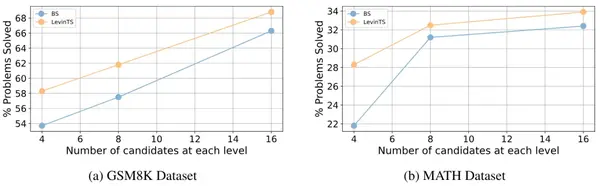

Figure 3: How M* performance changes with the number of step-level candidates. We use Llama-2-13B as the base model and beam search (BS) as the search algorithm.

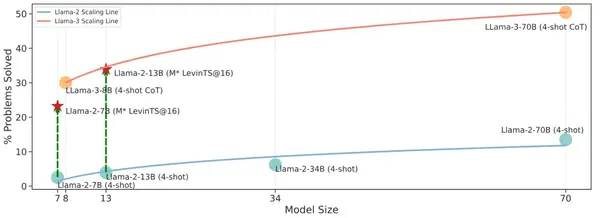

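Figure 4: Scaling laws of the Llama-2 and Llama-3 model families on the MATH dataset. All results are taken from their original sources. We use SciPy and a logarithmic function to compute the fitted curves.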
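A logarithmic fit of this kind can be sketched in a few lines. The article says SciPy was used; to stay dependency-free, the version below swaps in closed-form least squares for the model y = a * ln(x) + b, which is linear in ln(x). The data points are synthetic and generated inside the example; they are not results from the paper.

```python
import math
from typing import List, Tuple


def fit_log_curve(sizes: List[float], scores: List[float]) -> Tuple[float, float]:
    """Least-squares fit of scores = a * ln(sizes) + b; returns (a, b)."""
    xs = [math.log(s) for s in sizes]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(scores) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, scores))
    a = sxy / sxx
    b = mean_y - a * mean_x
    return a, b


# Synthetic points lying exactly on y = 5 * ln(x) + 2 (illustrative only).
sizes = [7.0, 13.0, 70.0]
scores = [5.0 * math.log(s) + 2.0 for s in sizes]
a, b = fit_log_curve(sizes, scores)
print(round(a, 6), round(b, 6))  # 5.0 2.0
```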

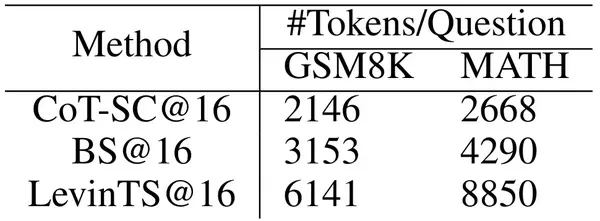

Table 2: Average number of tokens generated by different methods when solving problems.

4. Conclusion

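This paper introduces MindStar (M*), a novel search-based reasoning framework for enhancing the reasoning ability of pre-trained large language models. By treating reasoning tasks as search problems and using a process-supervised reward model, M* navigates the reasoning tree space effectively and identifies near-optimal paths. Incorporating ideas from beam search and Levin tree search further improves search efficiency and guarantees that the best reasoning path is found within bounded computational complexity. Extensive experiments show that M* significantly improves the reasoning ability of open-source models, reaching performance comparable to much larger closed-source models while substantially reducing model size and computational cost.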

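These results show that shifting computational resources from fine-tuning to inference-time search has great potential and opens new avenues for future research on efficient reasoning enhancement techniques.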

References:

[1] Nisan Stiennon, Long Ouyang, Jeffrey Wu, Daniel Ziegler, Ryan Lowe, Chelsea Voss, Alec Radford, Dario Amodei, and Paul F Christiano. Learning to summarize with human feedback. Advances in Neural Information Processing Systems, 33:3008–3021, 2020.

[2] Long Ouyang, Jeffrey Wu, Xu Jiang, Diogo Almeida, Carroll Wainwright, Pamela Mishkin, Chong Zhang, Sandhini Agarwal, Katarina Slama, Alex Ray, et al. Training language models to follow instructions with human feedback. Advances in neural information processing systems, 35:27730–27744, 2022.

[3] Ziyang Luo, Can Xu, Pu Zhao, Qingfeng Sun, Xiubo Geng, Wenxiang Hu, Chongyang Tao, Jing Ma, Qingwei Lin, and Daxin Jiang. Wizardcoder: Empowering code large language models with evol-instruct. arXiv preprint arXiv:2306.08568, 2023.

[4] Mark Chen, Jerry Tworek, Heewoo Jun, Qiming Yuan, Henrique Ponde de Oliveira Pinto, Jared Kaplan, Harri Edwards, Yuri Burda, Nicholas Joseph, Greg Brockman, et al. Evaluating large language models trained on code. arXiv preprint arXiv:2107.03374, 2021.

[5] Carlos Gómez-Rodríguez and Paul Williams. A confederacy of models: A comprehensive evaluation of llms on creative writing. arXiv preprint arXiv:2310.08433, 2023.

[6] Longhui Yu, Weisen Jiang, Han Shi, Jincheng Yu, Zhengying Liu, Yu Zhang, James T Kwok, Zhenguo Li, Adrian Weller, and Weiyang Liu. Metamath: Bootstrap your own mathematical questions for large language models. arXiv preprint arXiv:2309.12284, 2023.

[7] Zhihong Shao, Peiyi Wang, Qihao Zhu, Runxin Xu, Junxiao Song, Mingchuan Zhang, YK Li, Y Wu, and Daya Guo. Deepseekmath: Pushing the limits of mathematical reasoning in open language models. arXiv preprint arXiv:2402.03300, 2024.

[8] Keiran Paster, Marco Dos Santos, Zhangir Azerbayev, and Jimmy Ba. Openwebmath: An open dataset of high-quality mathematical web text. arXiv preprint arXiv:2310.06786, 2023.

[9] Peiyi Wang, Lei Li, Zhihong Shao, RX Xu, Damai Dai, Yifei Li, Deli Chen, Y Wu, and Zhifang Sui. Math-shepherd: Verify and reinforce llms step-by-step without human annotations. CoRR, abs/2312.08935, 2023.

[10] Meta AI. Introducing meta llama 3: The most capable openly available llm to date, April 2024. URL https://ai.meta.com/blog/meta-llama-3/. Accessed: 2024-04-30.